Got AI? Better Make it Cybersecurity Domain-Specific

AI is everywhere. I live in San Francisco, and a day doesn’t go by that I don’t see a billboard, an advertisement on the side of a bus, or a tech bro’s hoodie with two big letters on it: AI. It’s no different in cybersecurity marketing – AI terminology is everywhere. But too often, it’s tacked on as a buzzword – a thin layer washed on top of existing security tools, with little real impact. This makes it tricky to decipher what’s real and what’s hype.

When you see AI integrated into a cybersecurity product, a lot of the time it’s a general-purpose LLM (like GPT-5) that’s bolted onto a product as an afterthought. But what good is “generalized AI” for a security tool that is highly specialized to do a very specific job? I like my ChatGPT just fine – but for vacation itinerary recommendations, not in-depth threat analysis, parsing unstructured configuration data, reading JSON outputs, or mapping deviations to my NIST framework.

This is why domain-specific language models are so important in cybersecurity. A domain-specific language model has been trained or fine-tuned on data relevant to a particular field or problem space – in this case, cybersecurity. While a general-purpose LLM understands a wide variety of topics, from cooking to coding, a cybersecurity domain-specific language model is “specialized” in the language, context, and data of the cybersecurity world.

It's the difference between a general doctor who knows a bit of everything about medicine, and a neurosurgeon who’s spent years studying one area in depth. No disrespect to my primary care physician, but he won’t be cracking open my skull and doing brain surgery anytime soon.

If we compare general-purpose LLMs to domain-specific language models, it might look something like this:

Now, for a language model to truly count as cybersecurity domain-specific, it must be trained or fine-tuned on:

- Threat intelligence and threat context (MITRE ATT&CK data, CVEs, threat reports, etc.)

- Incident response playbooks

- Network traffic logs and malware analysis reports

- Configuration data

- Red team / blue team simulation data

- Security policies, frameworks, and regulations (NIST, ISO 27001, SOC 2, etc.)

- Defensive tool functionality across EDR, firewall, SASE, and more

The result? A model that has genius-level intellect in cybersecurity. It “speaks cybersecurity” fluently. It understands acronyms, threat actor behaviors, how your defensive controls work, log file formats, and more. When a highly trained cybersecurity domain-specific model reads a log, it doesn’t just say, “this PowerShell looks suspicious.” It says, “This PowerShell command uses Invoke-WebRequest to download an encoded payload from a suspicious domain. This behavior aligns with MITRE ATT&CK T1059.001 (PowerShell) – commonly used by the APT29 threat group.”

As cybersecurity threats grow more complex and data-heavy, security analysts, security architects, and CISOs need AI that:

- Understands the language of attacks

- Understands the language of the security tools engineered to stop those attacks

- Keeps up with real-time threat intelligence

- Automates the repetitive but context-heavy parts of cybersecurity work

Domain-specific language models can become the force multipliers for cybersecurity teams – not replacing humans, but amplifying and operationalizing their expertise.

Domain-Specific AI to Detect and Correct Defensive Weaknesses

There are many ways to use a domain-specific model in cybersecurity. You can leverage it for post-security event detection, investigation, and response. You can use it to aid threat hunting or analyze threat intelligence.

But what about a more preventative cybersecurity use case? You can use AI to help you detect and correct defensive weaknesses in the form of exposures, misconfigurations, underutilized security tool capabilities, and configuration drift. There are advantages to knowing where you’re weak before an attacker does, and then quickly fixing it. Domain-specific cybersecurity models can help you fully understand your environment and the exposures present, and ideally, automate the remediation process for rapid security hardening.

At Reach, MastermindAI™ is the engine that powers how we solve security problems. Unlike many other security solutions, MastermindAI™ is not a generic LLM bolted onto an existing product. It’s a purpose-built AI engine that analyzes millions of data points across your environment to develop an expert-level understanding of both your security tools and controls and the threats you’re facing. It then funnels that genius-level knowledge into domain-specific models, which in turn fuel AI agents that act and remediate on your behalf.

And by the way, unlike a general LLM, a purpose-built cybersecurity domain-specific language model keeps your data private. Models are trained in an isolated, private infrastructure. Customer data is encrypted, both when it is sent to Reach and at rest. Reach AI relies on verified, domain-specific data to power its configuration engine. This means all data processed by Reach is private and not shared with third parties, and third parties do not interact with Reach.

Here's how it works:

Step 1. Ingest and Understand Your Environment

Our domain-specific language model can integrate with your organization’s:

- Security tools (e.g., EDR, IAM, Firewall, SASE, and email security platforms)

- Threat intelligence feeds

- Ticketing systems and policy documents

Because it’s trained on cybersecurity schemas and tool syntax, it understands things like:

- What a Microsoft Entra ID conditional access policy does

- What a SentinelOne policy means

- What features you should turn on, given your exposure and risk level

In short, it speaks the “dialect” of your environment.

Step 2. Compare Against Known Baselines

Once it knows your environment, it can:

- Compare current configurations to vendor best practices (e.g., CrowdStrike, Palo Alto Networks, Microsoft Defender).

- Reference CIS Benchmarks, NIST SP 800-53, or MITRE D3FEND control baselines.

- Detect configuration drift – deviations from what’s considered “secure” or “standard.”

For instance, let’s say your EDR policy is missing memory scanning. You probably hadn’t noticed, but Reach flags it and tells you that, based on the vendor’s latest 2025 guidance, enabling this feature could block fileless attacks that leverage PowerShell.
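To make the drift idea concrete, here's a minimal Python sketch of the comparison step. It is an illustration only, not how Reach or any vendor implements it: the setting names (`memory_scanning`, `tamper_protection`, `script_control`) and the `find_drift` helper are hypothetical, and a real baseline would come from vendor guidance or a published benchmark rather than a hand-written dictionary.

```python
from typing import Any


def find_drift(
    baseline: dict[str, Any], current: dict[str, Any]
) -> dict[str, tuple[Any, Any]]:
    """Return settings whose current value differs from the baseline.

    Each entry maps a setting name to (expected, actual). A setting that is
    absent from the current config is reported with actual=None.
    """
    drift: dict[str, tuple[Any, Any]] = {}
    for setting, expected in baseline.items():
        actual = current.get(setting)
        if actual != expected:
            drift[setting] = (expected, actual)
    return drift


# Hypothetical EDR policy settings, invented for illustration only.
baseline = {
    "memory_scanning": True,
    "tamper_protection": True,
    "script_control": "block",
}
current = {
    "tamper_protection": True,
    "script_control": "audit",  # weaker than the baseline
    # "memory_scanning" is missing entirely
}

drift = find_drift(baseline, current)
for setting, (expected, actual) in drift.items():
    print(f"{setting}: expected {expected!r}, found {actual!r}")
```

A plain dictionary diff like this only catches exact mismatches. The point of the domain-specific model in the scenario above is the part this sketch leaves out: knowing what each setting does and why the deviation matters.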

Step 3. Correlate Exposures to Threat Intelligence

Here’s where a domain-specific model shines. It can cross-reference your current exposures with real-world threat data:

“The recent FIN7 campaign exploited endpoints lacking tamper protection in ‘EDR X’ (pick your favorite). Your environment currently has this feature disabled on 42% of devices.”

This creates contextual prioritization: not just what’s wrong, but why it matters right now.
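As a rough sketch of what contextual prioritization could look like in code, the Python below ranks exposures so that anything tied to an active campaign sorts first, and larger gaps sort ahead of smaller ones. Everything here is an assumption made for illustration: the `Exposure` class, the `active_campaigns` mapping, the control names, and the device counts are all invented, and a real system would weigh far more signals than these two.

```python
from dataclasses import dataclass


@dataclass
class Exposure:
    control: str          # hypothetical control name, e.g. "tamper_protection"
    exposed_devices: int  # devices where the control is disabled
    total_devices: int

    @property
    def exposed_share(self) -> float:
        """Fraction of devices lacking the control (0.0 if there are no devices)."""
        if self.total_devices == 0:
            return 0.0
        return self.exposed_devices / self.total_devices


def prioritize(
    exposures: list[Exposure], campaigns: dict[str, list[str]]
) -> list[Exposure]:
    """Sort exposures: campaign-linked first, then by share of exposed devices."""

    def score(e: Exposure) -> tuple[bool, float]:
        return (bool(campaigns.get(e.control)), e.exposed_share)

    return sorted(exposures, key=score, reverse=True)


# Hypothetical threat-intel mapping: control -> campaigns exploiting its absence.
active_campaigns = {"tamper_protection": ["FIN7"]}

exposures = [
    Exposure("usb_control", exposed_devices=300, total_devices=500),
    Exposure("tamper_protection", exposed_devices=210, total_devices=500),
]

ranked = prioritize(exposures, active_campaigns)
for e in ranked:
    linked = ", ".join(active_campaigns.get(e.control, [])) or "none"
    print(f"{e.control}: {e.exposed_share:.0%} exposed, active campaigns: {linked}")
```

Note that the tamper protection gap ranks first even though the USB control gap touches more devices. That is the "why it matters right now" part: threat context outranks raw counts.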

Step 4. Identify Underutilized Tools and Features

By reading tool telemetry, licensing information, and vendor documentation, the domain-specific cybersecurity language model can detect underutilized investments:

“You have licenses for CrowdStrike Falcon Complete but only use base EDR capabilities. Features like USB control and identity protection are not enabled. Based on telemetry, these could have prevented lateral movement seen in recent incidents.”

This helps leadership quantify ROI loss and operational gaps.
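At its simplest, spotting underutilized investments is a comparison between what you're licensed for and what's actually switched on. Here's a small Python sketch of that comparison. The feature names and the `unused_features` and `utilization` helpers are hypothetical, chosen only to mirror the example above, and real entitlement data would come from vendor licensing and telemetry rather than hard-coded sets.

```python
def unused_features(licensed: set[str], enabled: set[str]) -> list[str]:
    """Return licensed features that are not enabled, in alphabetical order."""
    return sorted(licensed - enabled)


def utilization(licensed: set[str], enabled: set[str]) -> float:
    """Fraction of licensed features in use (1.0 if nothing is licensed)."""
    if not licensed:
        return 1.0
    return len(licensed & enabled) / len(licensed)


# Hypothetical entitlements and enabled features, invented for illustration.
licensed = {"edr", "usb_control", "identity_protection"}
enabled = {"edr"}

unused = unused_features(licensed, enabled)
print(f"Unused licensed features: {', '.join(unused)}")
print(f"License utilization: {utilization(licensed, enabled):.0%}")
```

A utilization figure like this is one simple way to put a number on the ROI gap for leadership, though it treats every feature as equally valuable, which a real assessment would not.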

From Insights to Action: The Need for AI Agents

From this analysis, MastermindAI generates AI agents designed to work like true teammates for security architects. These agents are powered by our domain-specific models, which unite an understanding of real-world security data and threat context with a unique understanding of what your security tools are capable of and how they can be configured to reduce the most risk. They continuously monitor and patrol every security tool, every control, and every configuration to help teams proactively:

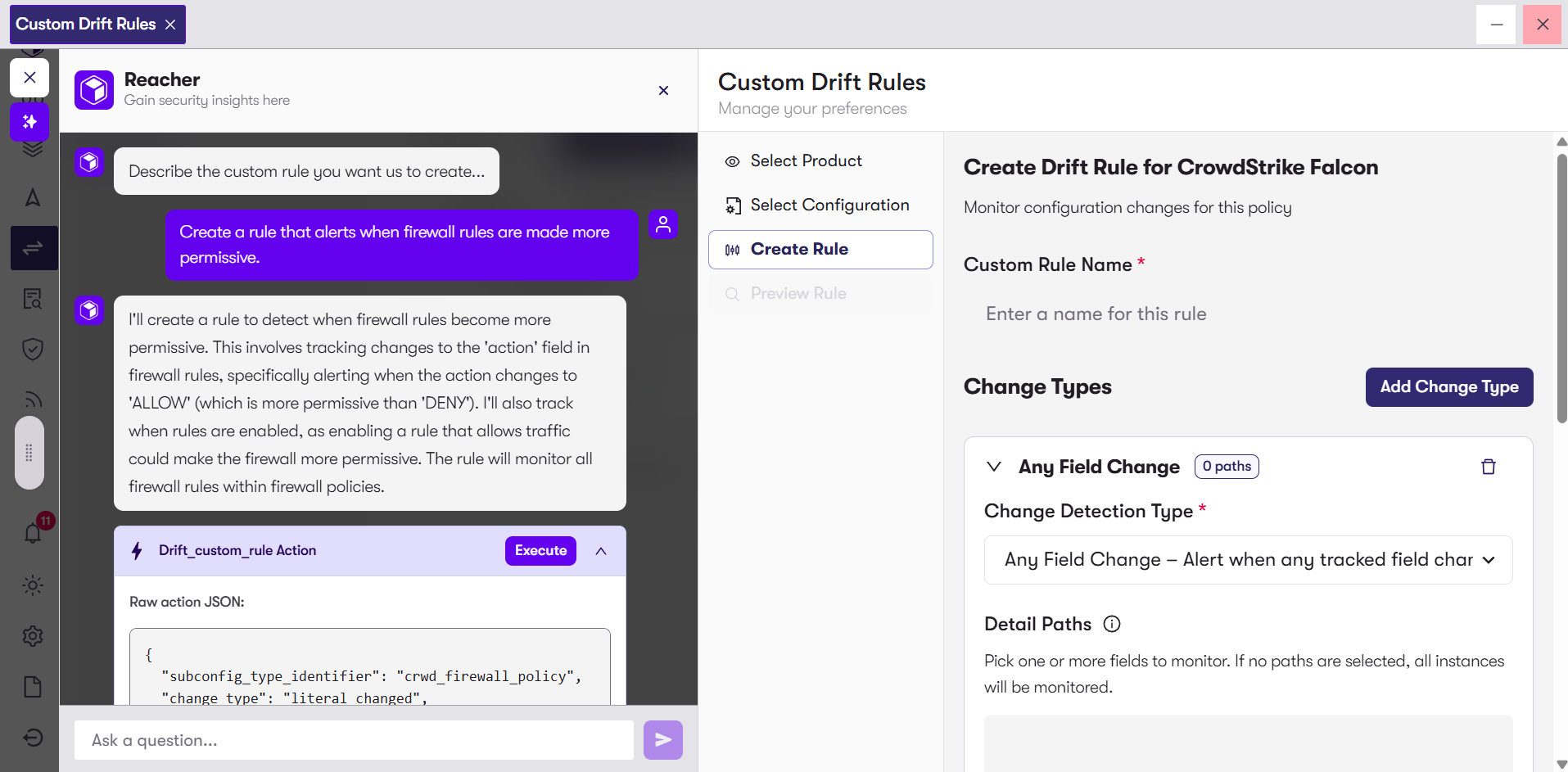

Reach also provides conversational AI support and drift rule generation with Reacher™, our interactive AI agent, which makes security posture accessible in plain language. Whether it’s clarifying exposure details, explaining risk in business terms, or creating custom drift rules for you, Reacher™ helps teams get answers and execute fixes on demand.

Cybersecurity Deserves a Better Class of AI

Domain-specific language models, and the AI agents fueled by them, are essential in cybersecurity. They can function like virtual security architects – continuously reading, comparing, and reasoning across your configurations, telemetry, and vendor intelligence to surface where you’re leaving value (and protection) on the table.

They don’t replace your security team. They amplify it by turning configuration sprawl into actionable exposure intelligence.

More About Reach Security

Reach Security is the first platform that bridges the gap between knowing your exposure and actually fixing it. Security teams are overwhelmed by exposures from misconfigurations, vulnerabilities, and tool sprawl. Most solutions stop at reporting. Reach operationalizes remediation.

If you struggle with manual audits and Excel checklists, Reach provides continuous AI-driven posture assessment. If you deal with security tool sprawl and underutilized security tool features, Reach can help you get the most out of your security investments. If you’re overwhelmed with reactive patching, Reach can provide automated, predictive exposure management. Learn more at reach.security/connect